I wrote this article for YLEM, a really cool Bay Area art/science organization. It appeared in Volume 20, Number 6.

Metaprogramming Emergent Graphics

How can you write a computer program that doesn't get boring?

I have had that oh-my-god feeling while looking at computer generated graphics. Sometimes it even lasts more than a few seconds. How can that feeling be sustained? Imagine you were given a limit of one megabyte of disk storage for a digital artwork. How can you keep the user's attention? The beauty of any one clip of video or effect may entrance you, but without change - without evolution - we move on. Mere repetition of even the best content is tiring.

Randomization produces change, but a difference that makes no difference (in the sense of Gregory Bateson) doesn't count. For example, pure white noise is always different, but it is boring because, although technically it contains many bits of information, those bits have no meaning. Software that produces random cut-up text (1) shows real change, but it impresses only until the same words and patterns reappear again and again. Except for those few moments when we get lucky, the stars align, and magically the machine speaks to us. How often does that happen? Can we make it happen more often? Is it possible to change coordinate systems to increase the likelihood of these lucky moments of beauty? Yes.

Any two artworks may be concatenated to form a larger work, but the information density remains unchanged unless there is a relationship between the works. The question is, how can we go super-linear? What kind of combination makes the whole greater than the sum of the parts?

Typical artificial life systems have a mapping from genotypes to phenotypes. I think of this mapping as a language - a mapping from programs to their meanings. There is a space of potential artificial creatures, each of which is a program describing that creature's virtual structure and behavior. For example, Tom Ray's Tierra is principally a metaprogram that executes the programs comprising the current population of the virtual universe.

A language can be defined either by an interpreter or a compiler. An interpreter is a metaprogram that reads a program as input, traverses its structure, and reduces it, performing the actions as it encounters them. A compiler is a metaprogram that reads a program and translates it into the native language of the metaprogram. In practical programming terms, interpreters are slow to run and easy to write. Compilers produce fast programs, but themselves run slowly and are hard to write. A compiler accelerates execution of the genotypes by removing a layer of abstraction. The applicability of this essentially reductionist technique is a fascinating question. Can awareness be compiled?

With eye-candy software, the objective is to create visual effects that absorb human attention. They do so by producing novelty - a sequence of surprises - from a fixed program. The information and meaning that keep your attention are decompressed by running the program. Iteration and recombination with the right language produce a diversity of effects and forms. The trick is finding the language in which beautiful things are easy to say. That is, to design a typewriter so that even a few thousand monkeys have a shot at producing real poetry.
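To make the interpreter/compiler distinction concrete, here is a minimal Python sketch of a toy pixel-expression language. The tuple encoding and the names `interpret`, `to_source`, and `compile_expr` are assumptions made for illustration; they are not taken from Tierra or from the software described in this article. The interpreter walks the program tree on every call, while the compiler translates the tree once into Python source (the native language of the metaprogram in this sketch) and hands back an ordinary function.

```python
import operator

# A program is a nested tuple: ("const", v), ("var", name), or (op, left, right).
# This encoding is hypothetical, invented for this example.
OPS = {"add": (operator.add, "+"), "mul": (operator.mul, "*")}

def interpret(expr, env):
    """Walk the tree and reduce it, doing the work as each node is met."""
    tag = expr[0]
    if tag == "const":
        return expr[1]
    if tag == "var":
        return env[expr[1]]
    fn, _ = OPS[tag]
    return fn(interpret(expr[1], env), interpret(expr[2], env))

def to_source(expr):
    """Translate the tree into a Python expression string."""
    tag = expr[0]
    if tag == "const":
        return repr(expr[1])
    if tag == "var":
        return expr[1]
    _, symbol = OPS[tag]
    return f"({to_source(expr[1])} {symbol} {to_source(expr[2])})"

def compile_expr(expr, params=("x", "y")):
    """Translate once, then return a plain Python function of the parameters."""
    source = f"lambda {', '.join(params)}: {to_source(expr)}"
    return eval(source), source

# Example program: 2*x + x*y
program = ("add", ("mul", ("const", 2), ("var", "x")),
                  ("mul", ("var", "x"), ("var", "y")))
pixel_fn, source = compile_expr(program)
```

Both routes give the same answer for the same inputs. The difference is that `interpret` re-examines every node tag on every call, while `pixel_fn` pays that cost once, at translation time.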

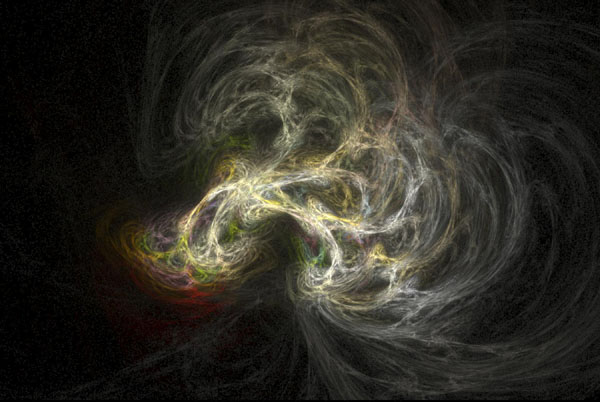

1. Flame, Fuse, and Bomb

I build software that creates visual artworks and freely distribute both the software and the resulting graphics over the internet (2). This article describes three of these projects: Flame, Fuse, and Bomb. All three are iterated nonlinear systems in which higher-order patterns emerge. They appeal to the sensuous eye, using natural colors, texture, and motion. They maximize user interest per byte of storage without exhibiting any practical value.

Bomb is a manifestation of the same vision that motivated my research in metaprogramming for graphics, which was about applying compiler generators to graphics. If the trick is to find the right language for mutation, then a tool for creating languages might be useful. There is an explosion of computation space opening up as price per flop approaches zero. These new spaces are a vacuum. The larger objective is to fill these spaces with life.

Flame was written in 1993, and is a two-dimensional iterated function system fractal renderer (it draws a histogram of a strange attractor). The implementation is carefully designed to produce images without any artifacts, revealing as much as possible of the information contained in the attractor. The conversion of the histogram into pixels is properly antialiased spatially and temporally (motion blur). Was the goal to make it beautiful or accurate?

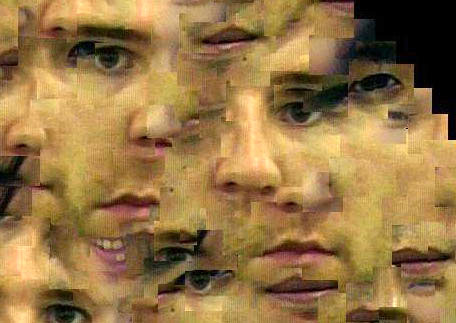

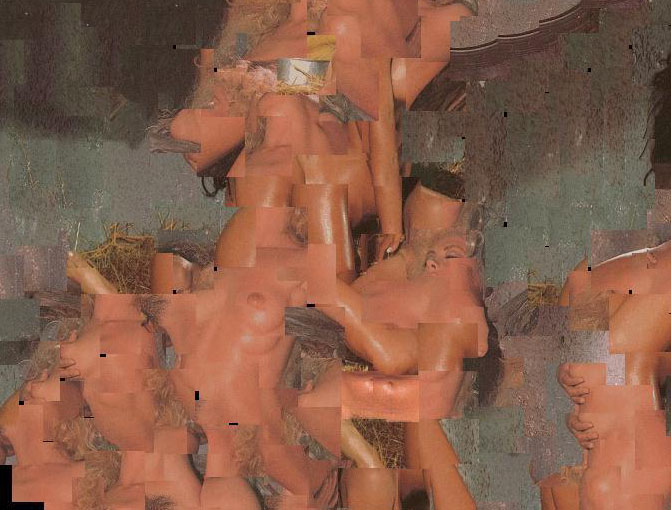

Figures 1 and 2 are examples of its output. Flame contains three innovations: 1) a set of seven special functions that increase the variety and character of the shapes, 2) brightness assigned according to the logarithm of the density, rather than linearly, and 3) coloring based on a third coordinate rather than density.

The logarithm is essential because the dynamic range of densities in the histogram is very large and has features at all scales (they follow a power law). That is, in one part of the image there may be adjacent pixels, one that was hit 3 times and the other 9 times by the dynamical system, and in another part of the image there are pixels that are hit 300,000 and 900,000 times. A logarithm is the natural way to exhibit both differences simultaneously. Without it some parts of the fractal are either oversaturated or starved.

Despite appearances, Flame images depict strictly two-dimensional objects. There is an illusion of three dimensions due to the logarithm: if a dense part of the attractor overlaps a thinner part, the brightness of the sum is nearly that of the dense part, so it looks as if one part occludes another.

The coloring algorithm works by adding a third dimension to the chaotic system. The third coordinate determines the color. This reveals the internal structure of the attractor.

The parameter space is a handful (2-5) of functions, each given by a 2x3 affine matrix (6 numbers) and 7 special function blending coefficients, for a total of 26-65 real dimensions. With so many dimensions and such a complex mapping it becomes almost impossible to design in that parameter space; instead, you can explore it.

Flame is the last of a series of implementations of iterated function systems I did going back to 1986. Besides the batch renderer for Linux that is available on my home page, Flame has been incorporated/ported into KPT5 (Kai's Power Tools), AfterEffects (by neosapien.net), the Gimp (a Photoshop-like program for Linux), and xscreensaver (the screen saver for Linux).
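The histogram and the logarithmic brightness mapping described above can be sketched in a few lines of Python. This is a simplified illustration, not the Flame source: the three affine maps are the familiar Sierpinski-triangle maps, the special functions, coloring, and antialiasing are left out, and `log1p` (the logarithm of one plus the count) is an assumed choice that keeps empty pixels at zero. The function names are made up for this example.

```python
import math
import random

# Three affine maps (a, b, c, d, e, f): (x, y) -> (a*x + b*y + c, d*x + e*y + f).
# These are the Sierpinski-triangle maps, chosen only as a familiar example.
MAPS = [
    (0.5, 0.0, 0.0, 0.0, 0.5, 0.0),
    (0.5, 0.0, 0.5, 0.0, 0.5, 0.0),
    (0.5, 0.0, 0.25, 0.0, 0.5, 0.5),
]

def histogram(size=64, iterations=50_000, seed=1):
    """Chaos game: iterate randomly chosen maps and count hits per pixel."""
    rng = random.Random(seed)
    counts = [[0] * size for _ in range(size)]
    x, y = rng.random(), rng.random()
    for i in range(iterations):
        a, b, c, d, e, f = rng.choice(MAPS)
        x, y = a * x + b * y + c, d * x + e * y + f
        if i >= 20:  # skip the first few points, before the orbit settles
            px = min(int(x * size), size - 1)
            py = min(int(y * size), size - 1)
            counts[py][px] += 1
    return counts

def linear_brightness(count, max_count):
    """Brightness proportional to the raw hit count."""
    return count / max_count

def log_brightness(count, max_count):
    """Brightness proportional to the logarithm of the hit count."""
    return math.log1p(count) / math.log1p(max_count)

counts = histogram()
peak = max(max(row) for row in counts)
image = [[log_brightness(c, peak) for c in row] for row in counts]

# The article's example: pixels hit 3, 9, 300,000 and 900,000 times.
for hits in (3, 9, 300_000, 900_000):
    print(hits, linear_brightness(hits, 900_000), log_brightness(hits, 900_000))
```

Under the linear mapping the pixels hit 3 and 9 times are both essentially black next to a pixel hit 900,000 times, while the logarithmic mapping leaves each pair visibly distinct.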
Fuse was also created in 1993. It takes any collection of input images (typically photography of nature or people), and produces an output image that is a resampling of the input. It performs associative image reconstruction. It is a visual analog of travesty-style (1) automatic text cut-up programs. I found that photos of body parts made excellent inputs. Figures 3 and 4 are details of Fuse images. Figure 3 is from a combination of close-ups of my face and the faces of my friends. Figure 4 shows a result of reprocessing net.pornography: a sea of fleshapods.

The algorithm uses a Markov model of transitions between visual features in the input, and then feeds noise into the model to generate the output. The output image is built by copying samples of the input to overlapping destinations, where the sample is chosen (by associative search) to minimize the visual difference in the overlap areas. The search may be accelerated by starting the search with filtered low-resolution images, and finishing with full resolution.
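The overlap-matching idea can be illustrated with a deliberately reduced, one-dimensional sketch in Python. It is not the Fuse code: the input is a list of numbers rather than images, the visual difference is assumed to be a sum of squared differences, the noise is a random pick among the `top_k` best matches, and the coarse-to-fine search is omitted. The name `fuse_1d` and all of its parameters are hypothetical.

```python
import random

def overlap_cost(a, b):
    """Sum of squared differences between two equally long runs of samples."""
    return sum((p - q) ** 2 for p, q in zip(a, b))

def fuse_1d(source, out_len, patch=4, overlap=2, top_k=2, seed=0):
    """Build an output by copying patches of the source to overlapping positions.

    Each new patch is chosen so that its first `overlap` samples resemble the
    last `overlap` samples already written. Picking at random among the
    `top_k` best candidates is the noise that keeps the output from simply
    replaying the input.
    """
    if not 0 < overlap < patch <= len(source):
        raise ValueError("need 0 < overlap < patch <= len(source)")
    rng = random.Random(seed)
    starts = range(len(source) - patch + 1)
    first = rng.choice(starts)
    out = list(source[first:first + patch])
    while len(out) < out_len:
        tail = out[-overlap:]
        # Exhaustive associative search: rank every source position by how
        # well it matches the tail of the output so far.
        ranked = sorted(starts,
                        key=lambda i: overlap_cost(tail, source[i:i + overlap]))
        pick = rng.choice(ranked[:top_k])
        # Copy only the part of the patch that extends past the overlap.
        out.extend(source[pick + overlap:pick + patch])
    return out[:out_len]

# Example: resample a short signal into a longer one.
signal = [0, 1, 3, 6, 3, 1, 0, 2, 5, 2, 0, 1]
resampled = fuse_1d(signal, 30)
```

With `top_k=1` the result is deterministic after the first patch. Larger values trade seam quality for variety.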

The fuse code was has been rewritten a couple of times for different

image formats (eg 8 bit and 32 bit), but has otherwise been pretty

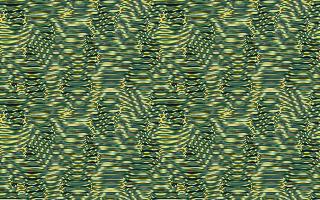

static. Bomb began in 1995 [oops, should be 1993 or 1994, -spot 2006] and has been ongoing since then, though no new major modes have been added since about the end of 1998. Bomb is a software system that produces visual music. It creates a video stream that is fluid, textured, rhythmic, animated, and generally non-representational. It uses multi-layered cellular automata, reaction-diffusion, an icon library, and a hodge-podge of special graphics hacks. It incorporates realtime versions of the Flame and Fuse algorithms as two of its modes. Three typical frames can be found in Figure 5.

The graphics may be influenced by an audio input. Control comes from the keyboard, from a sequencer such as Cycling74's Max, or from a self-play mode in which Bomb manipulates its own parameters and responds to the results. Bomb is a much larger part of my life than other projects in terms of hours of labor and interaction, and is more closely related to metaprogramming. Bomb has seen substantial growth and input from other developers, including several complete ports.

Flame and Bomb both use colormaps, that is, collections of colors numbered 0 to 255. Personally I am not a big fan of the usual saturated, rainbow-hued fractal and computer colors. In the spirit of open source, my colors are algorithmically derived from photographs of natural scenery and paintings by masters such as Monet, Van Gogh, and Klee. The algorithm heuristically sorts colors from the image so that colors with adjacent indices are similar visually (global minimization is impractical, since it amounts to the traveling salesperson problem, which is NP-hard (3)).
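The article does not say which heuristic is used to sort the colors, so the following Python sketch substitutes a standard one: greedy nearest-neighbor ordering, a common cheap approximation for traveling-salesperson-style problems. Squared RGB distance stands in for visual similarity, which is a crude assumption, and the function names are invented for this example.

```python
def color_distance(a, b):
    """Squared Euclidean distance in RGB, a rough proxy for visual difference."""
    return sum((p - q) ** 2 for p, q in zip(a, b))

def sort_colors(colors):
    """Greedy nearest-neighbor ordering.

    Start from the first color and repeatedly append the closest unused one,
    so that colors with adjacent indices tend to look similar.
    """
    remaining = list(colors)
    if not remaining:
        return []
    ordered = [remaining.pop(0)]
    while remaining:
        last = ordered[-1]
        nearest = min(range(len(remaining)),
                      key=lambda i: color_distance(last, remaining[i]))
        ordered.append(remaining.pop(nearest))
    return ordered

def path_length(colors):
    """Total distance walked when stepping through the colormap in order."""
    return sum(color_distance(a, b) ** 0.5 for a, b in zip(colors, colors[1:]))

# Example: a shuffled gray ramp, as might be sampled from a photograph.
samples = [(0, 0, 0), (200, 200, 200), (50, 50, 50),
           (250, 250, 250), (100, 100, 100)]
colormap = sort_colors(samples)
```

The greedy pass costs on the order of n squared distance evaluations, which is trivial for a 256-entry colormap. It gives no optimality guarantee, only an ordering that is usually much smoother than the input.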

2. Metaprogramming

My research in metaprogramming aspires to enable programmers to manipulate real-time media streams in a flexible, high-level way by treating the transformations of the streams as a language, and then applying the techniques of compiler generation and partial evaluation to produce native code implementations of particular combinations of video effects. I started with the idea that partial evaluation could make a powerful and portable interface to runtime code generation, and ended up focusing on extending compiler generation techniques to work with the packed bit-level representations that are common in sound and video data.

For example, say you have an effect you want to apply to an image. Naturally there is a loop over all pixels in the image, and some equation is applied to each neighborhood in the image. The effect looks good, but gets boring after a while. You add some options, or parameters, to the effect, so now it holds your attention longer because you can vary the parameters. But the equation must now account for these parameters and do extra work decoding them, so the effect slows down. More variety requires more parameters.

For example, consider a cellular automaton such as diffusion, in which at each iteration each pixel is averaged with its neighbors. This may be parameterized to allow using differently weighted averages of the neighbors of each cell, e.g. (N+S+E+W)/4 or (2N+2S+E+W+C)/7. The cellular automaton that is specialized to compute just one kind of diffusion runs faster than one that takes a vector with the five coefficients and can compute many different kinds. There is a natural trade-off between generality and performance. So with more parameters the effect runs even more slowly - if it runs too slowly then it no longer appears animated and it dies. In the quest for infinite variety you may go as far as to add a parameter that is a language, so the effect becomes a meta-effect with a parameter that describes the actual effect.
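The diffusion example can be sketched in Python as a general routine that takes the five weights on every call, next to a toy specializer that bakes one weight vector into generated source. This is an illustration under stated assumptions, not the author's system: Python's `exec` stands in for native code generation, the grid wraps around at the edges, the weights are assumed to have a nonzero sum, and the names `diffuse_general` and `specialize` are invented here.

```python
def diffuse_general(grid, weights):
    """One diffusion step with weights (north, south, east, west, center)."""
    wn, ws, we, ww, wc = weights
    total = wn + ws + we + ww + wc
    h, w = len(grid), len(grid[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = (wn * grid[(y - 1) % h][x] + ws * grid[(y + 1) % h][x]
                   + we * grid[y][(x + 1) % w] + ww * grid[y][(x - 1) % w]
                   + wc * grid[y][x])
            out[y][x] = acc / total
    return out

# Source text for each neighbor, in the same order as the weights.
NEIGHBORS = ["g[(y - 1) % h][x]", "g[(y + 1) % h][x]",
             "g[y][(x + 1) % w]", "g[y][(x - 1) % w]", "g[y][x]"]

def specialize(weights):
    """Generate a step function with the weights folded in as constants.

    Zero-weight terms are dropped and unit weights need no multiply, so the
    residual program does only the work this particular effect requires.
    """
    terms = []
    for weight, cell in zip(weights, NEIGHBORS):
        if weight == 0:
            continue
        terms.append(cell if weight == 1 else f"{weight!r} * {cell}")
    expr = " + ".join(terms)
    total = sum(weights)
    source = (
        "def step(g):\n"
        "    h, w = len(g), len(g[0])\n"
        f"    return [[({expr}) / {total!r} for x in range(w)]"
        " for y in range(h)]\n"
    )
    namespace = {}
    exec(source, namespace)  # stand-in for emitting native code
    return namespace["step"], source

# The two averages mentioned in the text.
plain, plain_src = specialize((1, 1, 1, 1, 0))      # (N+S+E+W)/4
weighted, weighted_src = specialize((2, 2, 1, 1, 1))  # (2N+2S+E+W+C)/7
```

Printing `plain_src` shows a residual program with no weight vector left in it. How much faster it runs depends on the host language; in a real system the generated code would be machine code rather than Python source.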
Such a meta-effect, however, requires invoking the interpreter for each pixel of the image, and testing the parameters at each pixel gives the same results every time, since the parameters are fixed for the whole image. Now the metaprogramming kicks in: it takes that parameterized pixel function and a particular parameter set and transforms them into native code that is then executed for each pixel. It factors out the repeated computation of decoding the parameters, leaving a residual program that is specialized to just one effect, but runs faster as a result.

3. Meaning

For me, it is certainly true that the only reason any algorithm is interesting is because of the image that it produces. But I produce more than images: I produce spaces of images. A program defines a mapping from parameter sets to images. The fundamental questions are, what fraction of the genotypes (parameter sets) are viable, and what is the diversity of the phenotypes (images)?

The Flame images on my web page, and those that I use to make prints, are just demos - my personal favorites. They are advertisements or bait, meant to draw attention into the core. Bomb is a visual parasite (4). I feel rewarded when someone looks at one of my pictures, but I get a larger feeling when someone uses my software to create their own pictures. And best of all is when someone tinkering with the source code improves the program, then disseminates their new version.

It's difficult for me to lay claim to my images at all. They are all found objects. It is the nature of nonlinearity and emergence that something unexpected and unpredictable happens. When writing new modes for Bomb, or when picking new parameter sets for Flame, I work in a generate and test mode: try random parameter sets and save those that look good. I modify existing programs and keep the improvements. In Bomb, I rarely knew in advance how a new cellular automaton would look. I just tried different combinations and equations until I found some that worked.
This kind of creative process, generate and test, is the same as evolution itself, and I believe it is the underlying mechanism of the more traditional "craft work" notion of creativity as well. Editing is a traditional form of creativity, and it too is based on generating a newer, better, smaller work from a larger, less well-structured body of text: the art of selection.

How do you choose your screen saver? Why do you care?

Notes:

This work is licensed under a Creative Commons License by spot at draves dot org.